Bias in writing museum artifact descriptions. Support for advising Goizueta Business School students. Protection of artistic copyrights. Car accidents at dangerous intersections. Obesity in Atlanta.

These topics may seem to have little in common on the surface. But in the Center for AI Learning’s AI.Xperience program, each one intersected with artificial intelligence (AI) as the subject of a research project led by Emory students.

For several weeks during the summer, 44 graduate and undergraduate students hailing from a variety of disciplines within Emory immersed themselves in data science projects proposed by schools and units across campus.

After soliciting project ideas and gauging interest from faculty and staff, Tommy Ottolin, senior program coordinator at the Center for AI Learning, presented the projects to the students. The students then ranked their project preferences, were matched with projects and were connected with their supervising faculty members.

“We wanted this program to give everyone the opportunity to learn more about data science and artificial intelligence,” said Ottolin. “We wanted them to apply it to a real-world concept and walk away from the summer with a fascinating experience where not only did they learn more about these concepts that they may or may not have seen within the confines of a classroom, but they also have become subject matter experts in niche applied fields of AI, which will help them professionally and academically.”

After being matched with their projects, the teams set out to investigate their topics and create a tangible output by the end of the summer. Ottolin and co-director Kevin McAlister, assistant teaching professor in quantitative theory and methods, introduced students to their project leads. From there, the students directed their own work, while each faculty lead guided the group and assisted where necessary.

In a world where AI can often seem scary or untamable, Ottolin said the results of the AI.Xperience projects, and the passion behind them, directly counter that narrative and point to positive implications for each field.

“All of these AI.Xperience projects are unique applications of AI and data science, and they all have produced interesting work in meaty and topical areas. I hope that we can show off the potential in a wide range of fields, and I hope that the program can inspire others on campus and beyond to look inward to their teams and question how they could heighten the potential for their skills if they married them with the newest technologies available to everyone,” said Ottolin.

When reflecting on the summer, Ottolin praised the students for their boldness in completing projects in diverse subject areas that have the potential to impact many people.

“It goes back to the goal of Emory’s AI.Humanity initiative,” Ottolin said. “We know the university has incredible expertise in business, law, arts and humanities, health and the social sciences, and we know that we have a deluge of humanistic thinkers who can think about the real-world implications of things. If we pair that with the emerging technologies, we can accomplish this higher level of accomplishment that isn’t accessible from either camp in a silo.”

Ottolin pointed to one pleasant surprise from the summer: the connections built between students.

“I think we’re building a community on campus of folks who are genuinely interested and excited, and that is literally all you need to get involved with the Center for AI Learning,” he said.

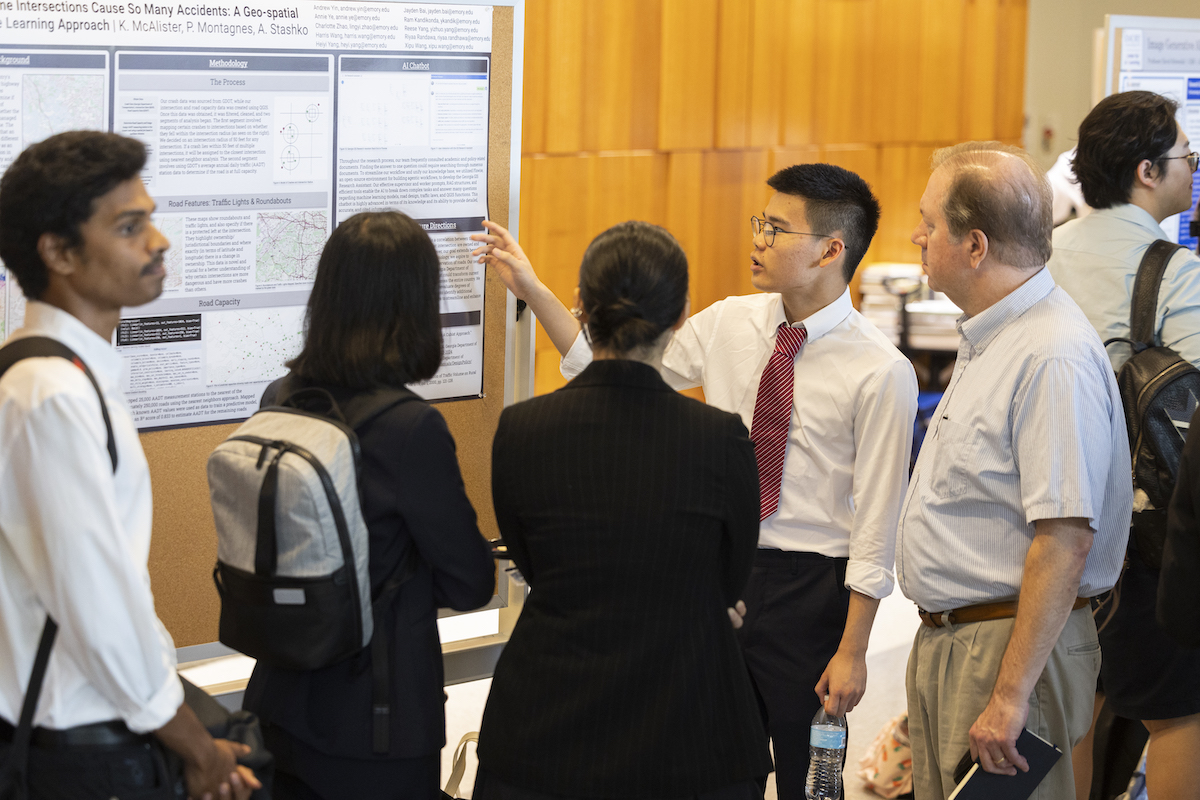

As summer drew to a close, student participants, Center for AI Learning staff, faculty and supporters gathered on campus to see each student group present its work through oral and poster presentations.


Bias in museum artifact tagging

The Michael C. Carlos Museum on Emory’s campus displays thousands of artifacts for visitors to enjoy. Descriptions of each piece accompany the artifacts, offering vital information such as the time period, a few paragraphs about the piece, the medium and other details.

The AI.Xperience group found that some descriptions contain bias, and they used AI to evaluate the museum catalog for its presence. Identifying four types of potential bias in descriptions (subjective, jargon, social and gender bias), the group extracted data from the Carlos Museum’s descriptions. After using language models to sort the biased terms in a selected set of descriptions, they trained a GPT (generative pre-trained transformer) to distinguish biased from unbiased text. Using a second GPT, the group could input biased text and receive suggestions for reducing the bias.

Raasikh Kanjiani, a third-year student studying computer science and minoring in math, expressed his appreciation for AI.Xperience, which immersed him in an impactful project aligned with his academic passions.

“I was interested in the technical side of this project, the challenge of applying computer science to a field that is super relevant, and it was an interesting project that I’d never seen discussed previously,” Kanjiani said. “I didn’t know AI.Xperience was an opportunity I had at Emory, and taking advantage of it has helped me learn a lot.”

Todd Lamkin, director of collections services and chief registrar at the Michael C. Carlos Museum, was one of two project leads for this group, advising students and meeting with them weekly to check progress and answer questions. For him, the project advances the goal of making learning spaces more accessible.

“Museums are for all,” Lamkin said. “A key task of 21st-century museums is to make their collections accessible to everyone. A great way to put this promise into action is to describe the collection in language that is understood by all and that avoids biased perspectives. This project is an important step toward this goal.”

Advising students in Goizueta Business School

Goizueta Business School enrolls approximately 1,300 undergraduate bachelor of business administration (BBA) students. Fourteen academic advisors guide those students through the requirements for their major and help them choose from more than 90 related course offerings.

The AI.Xperience group asked: How can AI be leveraged to enhance student advising? Describing the challenges of traditional advising, the group pointed to bottlenecks during peak advising periods, the difficulty of parsing program requirements and the high student-to-advisor ratio as problems AI could help address.

Drawing on the course atlas, syllabi, BBA catalog and other resources, the group trained an AI chatbot that gives students an on-demand way to ask questions about their schedules and course requirements. Wen Gu, associate professor in the practice of information systems and operations management, proposed the project and served as the group’s faculty lead. Gu said that because curricula and student needs constantly evolve, advisors face an uphill battle in staying informed.

“Our AI-powered academic advisor chatbot is designed to bridge this gap, empowering advisors with up-to-date, relevant information. This will not only ease their workload but also significantly enhance the quality of advising and, by extension, the student experience,” said Gu. “This tool will revolutionize the way academic advisors operate, significantly enhancing the speed and quality of their work.”

Chris Paik, a third-year business student, saw this project as an opportunity to combine his interest in education with his desire to learn more about the practical applications of AI.

“It was enlightening to learn more about what goes into advising, and the potential to make that process better for everyone through this project is exciting,” said Paik. “I’m also interested in learning more about AI. It’s been engaging to utilize AI to put something to practice and make something meaningful out of it.”

Generative AI image corruption

When users enter prompts into AI image generation models, the resulting images may seem like entirely new work. In reality, many generative AI models are trained on images taken from the internet without the artists’ consent and without regard for compensation. In other words, the software uses unlicensed images to create new art.

A group of AI.Xperience students studied the effectiveness of NightShade, a program that distorts images before they are used to train AI models, inhibiting a model’s ability to interpret prompts and create content based on them. Using a set of Claude Monet paintings as their test group, the students “poisoned” the images with NightShade, then used an AI model to evaluate image quality and compare the altered images with the originals. They found that NightShade’s effect on visual aesthetics is small and acceptable, changing each image just enough to poison it.

David Schweidel, professor of marketing at Goizueta Business School, served as the faculty lead for the AI image generation group.

“Generative AI requires massive data for development, but we don’t often talk about the fact that the data are produced by individuals,” said Schweidel, noting the importance of this project. “Whether we’re talking about journalists, musicians, authors or visual artists, they never consented to their work being used in this way. Being able to measure how much an individual’s work contributes to a generative AI model’s performance is a necessary step in understanding the value it creates.”

Schweidel said the Emory students involved in this project got a chance not only to sharpen their skills but also to consider the direction AI is headed.

“It’s great to see students embrace problems that have far-reaching consequences. I think the project not only gave them additional training in their technical skills, but also required them to grapple with their own level of comfort with how AI systems are developed. These are exactly the types of issues that students should be confronted with so that they can decide where they stand on such topics,” said Schweidel.

Tom Suo, a third-year student studying applied math and statistics, said he was drawn to the project to diversify his experience with AI.

“Working with generative AI, as opposed to geospatial analysis and chatbots, interested me the most because it was new to me, and I wanted to try something different this summer,” Suo said. “In the future, I think this work can apply to copyright laws and the legal world.”

Obesity in Atlanta

Though some projects began as a hyper-localized look at one particular issue, the project investigating factors that influence obesity in Atlanta started from a broader base: an article published by Xiao Huang, assistant professor in the Department of Environmental Sciences, in which he and his co-authors explored obesity across the United States.

“I thought it would be a great idea to extract data specifically from the metro Atlanta area and give it to the students. This way, they could work with real research data and apply cutting-edge AI modeling techniques,” said Huang, who also served as the project lead for the team.

Throughout the summer, the group used machine learning methods to identify the primary drivers of obesity in metro Atlanta, measure how these factors influence obesity outcomes and offer policy suggestions based on the results. The group found that behavioral factors, such as lack of sleep and physical activity, outweigh sociodemographic and environmental factors. These findings point to the critical role lifestyle plays in determining obesity.

Huang noted that the model created by this AI.Xperience team applies beyond obesity.

“Cutting-edge, explainable AI technologies can help uncover the intrinsic relationships between contributing factors and outcomes,” he said. “This project uses obesity as a case study, but AI models can certainly help predict other disease outcomes as well.”

Sana Ansari, a fourth-year student studying quantitative sciences and public policy, took many lessons from the project over the summer. Her major and a previous internship focused on factory farming drew her to the project, and applying AI to her existing understanding of how food contributes to health problems made for a fascinating summer.

“I’ve always been interested in public health and public policy,” Ansari said. “I knew I would enjoy this project because I understood the potential impacts.”

Car crashes at dangerous intersections

Anyone who has driven around Atlanta has probably passed a familiar scene at an intersection: a car crash. One AI.Xperience group set out to understand what makes intersections dangerous by investigating whether intersections of roads owned by different jurisdictions have more crashes. The group gathered crash data and created a chatbot to answer questions about road planning, maintenance and legality.

The compiled data confirmed the group’s hypothesis. Intersections at jurisdictional boundaries, such as where city and county roads meet, did have more crashes due to inconsistent maintenance and design issues. The group also found that the intersection with the greatest number of crashes was at I-85 and Pleasantdale Road.

Pablo Montagnes, associate professor of political science and quantitative theory and methods, noted how impactful the findings of this project could be in reducing deaths and injuries due to traffic accidents.

“Traffic accidents are some of the most tragic forms of death and injury, especially because they often affect young people. I've lost dear friends in their 20s to car accidents, and their loss is deeply felt,” said Montagnes. “There are known improvements that could be made to our roads, yet progress seems stalled. This project attempted to explore one possible reason for this lack of progress.”

Montagnes was impressed by the AI.Xperience group’s dedication to addressing these challenges head-on by utilizing AI.

“Seeing the students so enthusiastic about contributing to a real-world problem like traffic accidents — and recognizing that their work could eventually lead to meaningful change — was truly inspiring. They were full of ideas, and the scope and depth of the project expanded throughout the summer,” Montagnes said.

Riyaa Randhawa, a third-year student studying computer science and minoring in business, agreed with Montagnes’ assessment of the profound potential impact of their findings.

“I thought this project could make real change, through working with government entities. I’ve driven around Atlanta, so I’ve seen the conditions of roads and crashes myself. I felt that this project was the perfect combination of learning and implementing real-life solutions,” said Randhawa.

Reflecting on her time in AI.Xperience, Randhawa said she enjoyed not only the subject matter but also the program’s format and the experience she gained.

“I really liked how the pace of the project, our goals and outcomes were all reliant on us as students. It gave me a glimpse of potential job roles that I could see myself in later on,” she said. “AI.Xperience made me feel a lot more connected not only to the professors here but also to the Center for AI Learning. It excited me to see the number of connections they had in the community and across Emory.”