Cancer researchers have a multitude of tools to study tumors. Histological staining uses dyes to make different kinds of tissue cells visible in microscopic slide images. CT scans can pinpoint the size, location and spread of a tumor. Epigenetic analysis can track a cancer’s growth and genetic regulation.

“These different lenses — macroscale and microscale — really provide different perspectives on the same tumor,” says Anant Madabhushi, executive director for the Emory Empathetic AI for Health Institute as well as a researcher at the Winship Cancer Institute.

What if you could use artificial intelligence (AI) to break down the boundaries and combine different types of images to yield deeper insights into cancer risk and prognosis?

That’s the goal of four recent studies that promise to produce more accurate cancer risk assessments. Nabil Saba, professor of hematology and oncology in the School of Medicine, who collaborated on three of the four studies, says the application of AI to cancer diagnosis is “revolutionizing everything we do.”

“If we look at these tools individually, we're missing the bigger, more comprehensive portrait of the tumor,” says Madabhushi, senior researcher on all four projects. “It's only when we start to converge these different scales of data — at the radiographic scale with the cell scale and the microscopic scale — that a true, comprehensive portrait of the complexity of the tumor starts to arise. That’s very important from a translational standpoint because it allows us to understand how the tumor is behaving.”

Focusing on head and neck cancers

The four projects all focus on head and neck cancers, particularly oropharyngeal tumors — cancers of the throat. Madabhushi says these are growing at epidemic proportions and exhibit complexities that might benefit from the insights provided by AI.

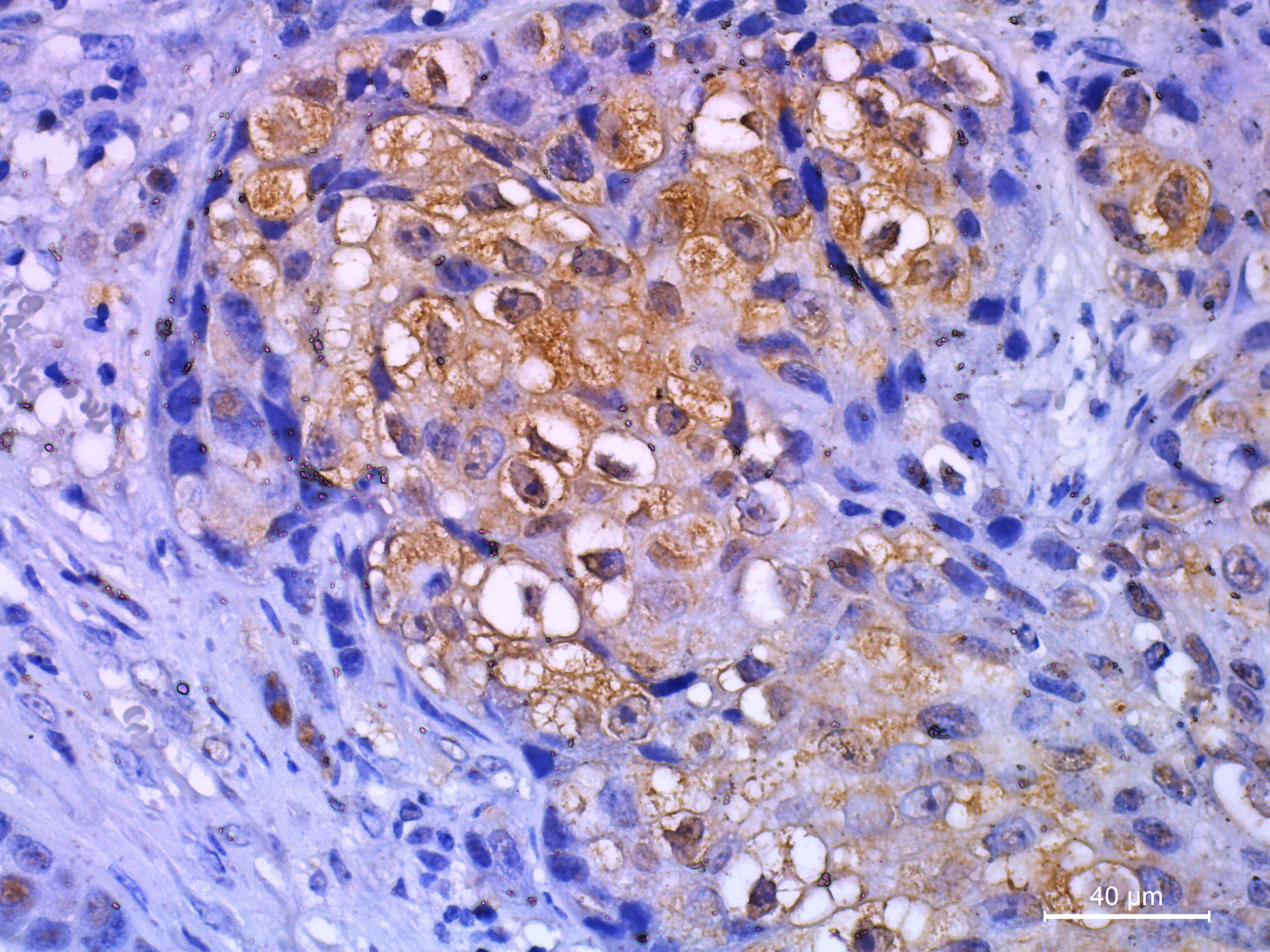

Histological slide stain image

Getty Images/Nilrat Wannasilp

“Head and neck cancer is really a combination of multiple tumors,” he says. “If they occur in the mouth, those are oral cavity tumors. Then you've got tumors of the oropharynx or oropharyngeal tumors. There’s a whole plethora of different tumors based off the site of incidence within the head and neck.”

Four teams of researchers used a range of AI models to analyze diverse data. For several cancers, immunohistochemistry (IHC), a form of tissue staining that uses antibodies, is often performed to detect and visualize antigens: the proteins and other molecules that antibodies recognize and that can trigger immune responses.

For instance, IHC is used to identify tumor-associated macrophages (TAMs), white blood cells associated with tumors. One group developed an AI platform called VISTA and used it to transform standard hematoxylin and eosin (H&E)-stained tissue slides from patients with throat cancer into virtual IHC slides, which in turn allowed the researchers to identify TAMs.

“These tumor-associated macrophages have a strong prognostic role in a number of different cancers,” Madabhushi says. “They’re very difficult to identify on a standard H&E tissue slide. By taking this approach, we teased out something that you really need special glasses to see.”

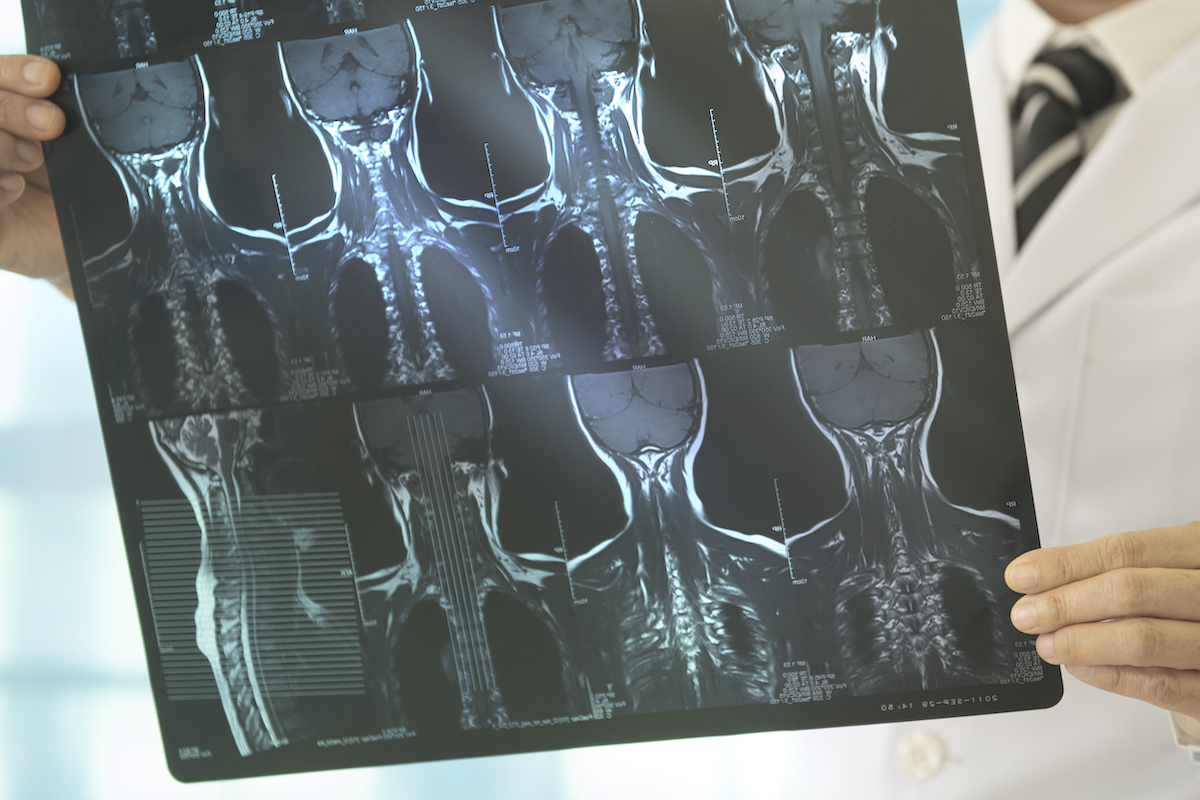

Studies two and three both used a machine learning program called a swin transformer to merge different kinds of data. Study two combined data from pre-treatment CT scans of primary throat cancer tumors, using attributes and features extracted from both the primary tumor as well as lymph nodes in the neck. The combination of features from both the tumor and the lymph nodes on the pre-treatment CT scans are highly associated with long term prognosis of head and neck cancer.

CT scan of a malignant lung tumor

Getty Images/kalus

In study three, investigators adapted the swin transformer into a model called the swin transformer-based multimodal and multi-region data fusion framework (SMuRF). The model let them move seamlessly between two-dimensional H&E tissue slide images and three-dimensional radiological images, which capture the tumor at different scales. Combining these image types let them integrate CT scans of both the primary tumor and the lymph nodes with microscopic slide images of the primary tumor.

“You've got the microscopic scale, and now you've gone to the macroscopic scale,” Madabhushi says. “But what’s really interesting is that this swin transformer approach allowed us to tease out subtle patterns of the tumor and combine representations from both these different regions. We were then able to predict, not just patient survival — we were also able to identify specific head and neck cancer patients who are truly going to benefit from chemotherapy.”


The fourth project went a step beyond combining images by linking slide images with epigenetic data about the cancer. Head and neck tumors come in many different shapes and sizes, which complicates diagnosis and treatment. Using a new model called pathogenomic fingerprinting, the researchers linked the visual architecture of tumor cells in slide images with patterns of gene regulation that are believed to shape the development of the tumor itself.

“Having a more molecular understanding of the epigenetics of the tumor goes a long way in increasing our understanding of what the tumor is at a cellular level,” says Madabhushi. “We were able to bridge those two worlds of the tissue and corresponding epigenetics of the tumor.”

Improved cancer risk assessments

All four studies aimed to better classify patients’ risk.

“Which of these tumors are more aggressive and going to progress? Which ones are less aggressive and may not progress as much?” Madabhushi asks. The goal, he says, is “to develop actionable tools that can be used by the clinician to make patient interventions.”


In all four studies, the combined data produced cancer risk assessments that matched or outperformed those based on any single data source. Despite these promising results, coauthor Saba, the Halpern Chair in Head and Neck Cancer Research at the Winship Cancer Institute, believes it’s important to move cautiously before translating these AI tools into the clinic.

“I think we're in a stage of understanding what can be done,” he says. “The question is how to do it. That will take time. When you generate large volume of data, there's a chance that this data may overlook other aspects that are important to patient care. It's good to generate data, but then this data has to ultimately help the individual patient. The key is how to actually analyze the data in the context of patient care to provide the best treatment possible.”