Azra Ismail was born in India’s capital city of Delhi and grew up in Abu Dhabi, making frequent visits to her native town in Bihar, one of the poorest states in India. “I grew up seeing intense disparities ... seeing the haves and have-nots,” she says.

Prof. Azra Ismail

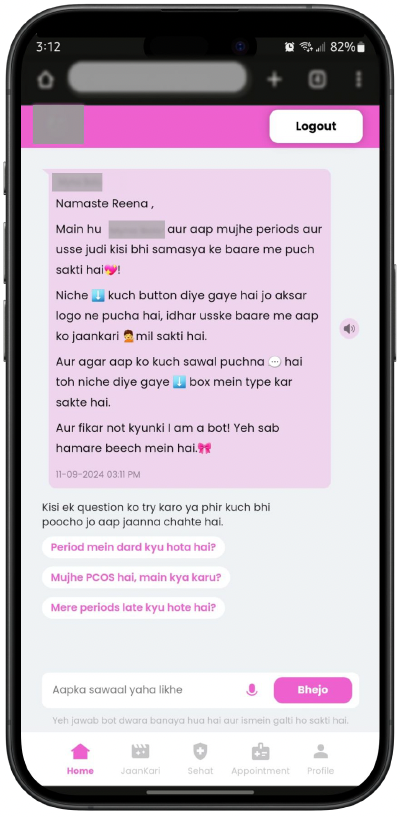

Much of Ismail’s work focuses on improving access to maternal and child health care, especially for women from underserved communities. She collaborated with the Myna Mahila Foundation, an India-based nonprofit working to improve women’s health, and its founder, Dr. Suhani Jalota, to develop a mobile-based AI chatbot that provides support for sexual and reproductive health, including information about family planning, pregnancy care and even basic reproductive anatomy. But adapting an AI model trained on data from developed countries for people in a completely different technical and cultural environment is a hard task.

“It comes down to issues with language, and challenges with ensuring that these technologies are culturally appropriate,” Ismail says. “If you're able to access health information from your home, that's reliable, accurate and timely, that can have a potential impact on your health. But to get to that point where information is understood by the end user in the way it was meant to be, is a demanding task.”

Developing the right model

The task of delivering better health care information began with extensive field work to learn what kinds of questions the target audience of women had and what issues they were experiencing in sexual and reproductive health. Ismail and her team wanted to know how specific these questions and issues were to a particular community, and when they would translate to other communities. “You also don't want to build something so specific that it only meets the need of one community,” she notes.

“It's not just about the data,” Ismail says, “but also creating the kind of prompt that's going to result in the type of response you want — making sure the tone is appropriate and empathetic and contextualized for that community. If you're giving nutrition information, making sure you're offering food sources that are locally relevant. If you're offering a suggestion on social support in pregnancy care, making sure you're aware of gender norms and family norms. If you're offering information about contraceptive use, being cognizant of the gaps in this community around using certain kinds of contraceptives.”

Ismail says her multicultural background helped her recognize the challenges people face when trying to access health care through technology created in developed countries that may not translate easily to other cultures. This raises deeper questions about how large language models need to be adapted in order to function in the developing world.

“I’m cautiously optimistic that we'll see more equitable outcomes and approaches as different countries build their own language models,” she says. “We're seeing a lot of efforts for Indian large language models being built by Indian researchers in India to support languages that are not well supported by ChatGPT, for instance. There's a long road to get there, but we're starting to see countries take their own positions on these issues and build AI plans and strategies that align with their region’s needs.”