EMORY MAGAZINE | SPRING 2023

THE AI REVOLUTION IS HERE

The Rise of Chatbots and Other Ways Artificial Intelligence Is Changing Our Lives Right Now

We have long taken for granted how much artificial intelligence (AI) influences our daily lives. From the facial recognition that unlocks our phones in the morning to the smart thermostat that keeps our homes comfy throughout the day to the algorithm that suggests what we watch on TV at night, the technology has largely been smooth, unobtrusive, and welcome.

However, AI seems to have recently taken a big leap in evolution — at least in the public eye — and appears more intelligent than ever. Groundbreaking developments such as sophisticated chatbots, smart search engines, and stylistic art generators (including those uncanny deep fake emulators) have finally caught our attention.

AI is now talking back and showing something of a mind of its own.

For the millions who have tried AI apps during the past year, playing with these new tools has been fun (as we rushed to create cool profile pictures for our Facebook accounts), frustrating (as we realized we are essentially beta testing the early and buggy iterations of this technology), and more than a little bit unsettling (as we learned how closely AI could mimic and outright steal human work and creativity).

Experts in the field, including a growing corps of Emory faculty and staff hired for their AI knowledge, have been closely watching these advancements unfold. They are optimistic about how artificial intelligence can ultimately enhance our lives but concerned about whether it will be used ethically.

These teachers and scientists study the tech across a wide range of disciplines as part of Emory’s AI.Humanity initiative, a university-wide effort to make AI a foundational part of its curricula and research. Hailing from fields that range from medicine and public health to business and law to core sciences and the humanities, our experts are helping make Emory a force for good, ethical AI and staying one step ahead of our would-be robot overlords.

Here, according to some of Emory’s leading thinkers in AI, are a few of the latest trends you need to know about.

RISE OF THE CHATBOTS

Part of the reason AI has so seamlessly (and unassumingly) integrated into our world is that, to date, the technology has functioned quietly in the background. The algorithms that curate our news feeds and monitor our bank accounts toil out of sight, out of mind. But some new applications in machine learning are proving much harder to ignore. And few are as in-your-face as ChatGPT.

Released in November 2022, ChatGPT (the GPT stands for Generative Pre-trained Transformer) is a chatbot developed by OpenAI. It is the latest and best known in a line of large language models that are trained on vast amounts of text from the internet to produce original and strikingly human-like sentences. It can answer questions, give suggestions, write letters and stories, and even conduct a human-like conversation. In other words, ChatGPT has given AI a voice, and some people are unnerved by what it has to say.

“It is both more and less than people think it is,” says Paul Root Wolpe, director of the Emory Center for Ethics. “It’s an information-generating technology. What it doesn’t have is consciousness or sensitivity or judgment. The issue is the use and misuse of it.”

Since ChatGPT (and even more recent platforms developed by Google and Microsoft) is a communication tool, its impact can be felt in pretty much any field. One sector that has seen an immediate disruption is higher education, where students could use the tech to answer essay questions and author papers that are almost impossible for professors to distinguish from original works. That really hits home at a university like Emory.

“The point of college assignments is not to find the right answers, it’s to go through the process of thinking about the questions,” says Chinmay Kulkarni, associate professor of computer science at Emory College of Arts and Sciences. “Those students are cheating themselves out of an opportunity to think for themselves.”

(Speaking of thinking for themselves, some enterprising young minds have already developed tech to help teachers identify AI-crafted work.)

Another issue is the fact that ChatGPT is trained on information from all over the internet, meaning that its output may be rife with all the web’s inaccuracies, biases, and misinformation. The app can also sometimes generate completely fabricated data and sources. This is especially troubling to thinkers in the medical field.

“My concern is that people will start to look at these tools as virtual physicians and start to follow their recommendations,” says Anant Madabhushi, professor of biomedical engineering at the Wallace H. Coulter Department of Biomedical Engineering and Emory School of Medicine. “There could be severe consequences there.”

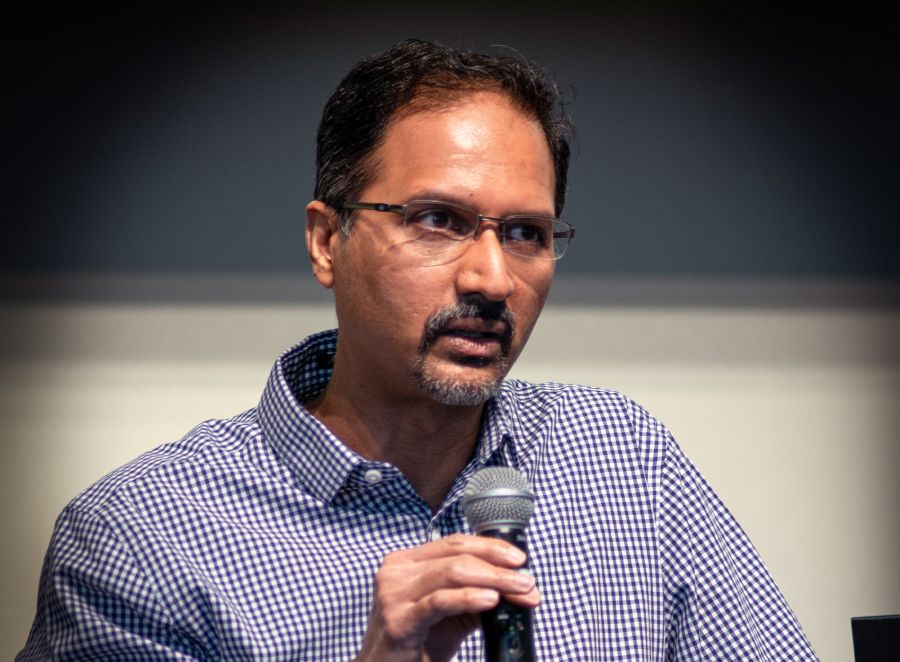

Such scenarios also present myriad legal questions. Who is responsible when potentially dangerous misinformation is disseminated? And since ChatGPT is drawing from existing sources, what happens when the app incidentally produces a work — like an essay or a book or even a poem — that mimics someone else’s intellectual property a little too closely? What constitutes fair use?

“The legal issues are many,” says Matthew Sag, professor at Emory School of Law. “Is it really lawful to use content you just found on the web to train a model to produce new content? And when ChatGPT produces it, who owns it?”

Matthew Sag, professor at Emory School of Law

Despite these concerns, the Emory experts all expressed optimism that, a few bad actors notwithstanding, ChatGPT and similar tech could be a force for good, freeing humans from menial tasks to focus on bigger questions that, for the moment at least, only we can answer.

For instance, you could use the chatbot to brainstorm approaches to a school paper, code a website, or even outline a journalistic story that you could then edit, refine, and rewrite, saving hours of time and frustration. It could also be employed to draft rote messaging — such as marketing copy, formulaic press releases, and even emails — allowing us to dedicate more time to truly creative endeavors.

“I sometimes ask my students to log everything they use a smartphone for and then ask, ‘Is it replacing someone’s job?’ ‘Did it create a new job?’ or ‘Did it change the job in some complex way?’” says Kulkarni. “More often than not, it’s the latter. And it’s the same with ChatGPT. It’s going to transform current ways of working.”

Lauren Klein, Winship Distinguished Research Professor and associate professor of English and quantitative theory and methods

Lauren Klein, Winship Distinguished Research Professor and associate professor of English and quantitative theory and methods, studies the intersection of the humanities and data sets. While she’s excited about the ways AI could make our lives and jobs easier, she’s also worried that, left unchecked, the tech could magnify the sexism, racism, and other inequities already rampant on the internet. “The challenge will be how to ensure that technologies like ChatGPT improve the possibilities and conditions of work rather than lead to additional inequality,” Klein says.

Learn more about AI and how it could impact higher education at the Artificial Intelligence and the Future of Higher Education online panel discussion on Friday, March 24 from noon to 1:30 p.m. Provost Ravi V. Bellamkonda will open the webinar, which will include panelists Lauren Klein and Matthew Sag. Registration is required for this free Zoom-based event.

Asking ChatGPT the Question We All Want to Know

Emory Magazine: Are you good or evil?

ChatGPT: As an artificial intelligence language model, I do not have the ability to be good or evil, as I am not capable of having moral values or intentions. My purpose is to assist and provide information to the best of my ability, based on the data and information that has been input into my programming. I do not have the capability to make decisions or take actions on my own, as I am solely an automated system designed to provide responses to user input. Ultimately, the way that I am used is determined by the individuals who interact with me. It is up to humans to decide how they will use technology, including AI, and to ensure that its use aligns with ethical principles and values.

TARGETED CREATIVITY

Just as ChatGPT has accelerated AI-generated text, other applications trained on web-based material are doing the same for original images (DALL-E and Starry AI) and sound (Murf.AI and Speechify). It seems only natural that these technologies would merge to create multimedia creative content, including movies, shows, and, of course, commercials of all shapes and sizes.

And when you combine this integrated multisensory approach with the vast amount of personal consumer data already being mined and used in advertising, entire individualized ad campaigns might not be far away. “The cost of creating visual content — packaging, story boards, etc. — has just plummeted,” says David Schweidel, professor of marketing at Goizueta Business School. “And the speed at which it can be done is astonishing. In the time it took to generate one campaign, I could now create 20. And I can target that audience.”

ONE-SHOT LEARNING

Part of the wonder around machine learning models like ChatGPT is the sheer vastness of the data on which they are trained. In fact, the more sources these information generators can process, the more detailed and unique their output becomes. But there is also a movement toward severely limiting the size of the data sets used to train these algorithms in order to produce a faster and more specific result.

For example, say you’re trying to build an image recognition model to distinguish huskies from wolves. Instead of feeding the program hundreds or thousands of examples of each animal, you train the neural network on a single image of each. This not only speeds up both training the model and producing a result, but it can also prove invaluable when trying to detect something for which there aren’t a lot of examples, as is often the case in complex biomedicine.

Anant Madabhushi, professor of biomedical engineering at the Wallace H. Coulter Department of Biomedical Engineering and Emory School of Medicine

“That’s really important in cases of rare diseases where you don’t have a lot of data to work with,” says Emory School of Medicine’s Madabhushi. “Medicine is so much more complex than, say, driving a car. I don’t know to what extent single-shot learning will have an impact on biomedicine. But it’s very exciting.”
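
For readers curious about what learning from a single example can look like in practice, here is a minimal sketch in Python. It is not how any particular Emory lab or commercial product works, and it swaps in a simpler technique than training a neural network from scratch: it assumes each photo has already been turned into a short list of numbers (a "feature vector") by some pretrained model, then labels a new photo by finding which single stored example it most resembles. The function names and the feature values are hypothetical, made up purely for illustration.

```python
import math


def cosine_similarity(a, b):
    """Return how closely two feature vectors point in the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def one_shot_classify(query, support):
    """Pick the label whose single stored example is most similar to the query."""
    return max(support, key=lambda label: cosine_similarity(query, support[label]))


# One example per class. These numbers are invented for illustration;
# in a real system they would come from a pretrained image model.
support = {
    "husky": [0.9, 0.2, 0.7],
    "wolf": [0.3, 0.9, 0.4],
}

# Hypothetical features for a new, unlabeled photo.
query = [0.8, 0.3, 0.6]
prediction = one_shot_classify(query, support)
print(prediction)
```

The appeal of this design is that adding a new category, such as a rare disease, requires only one labeled example rather than thousands. The trade-off is that the result depends heavily on how good the underlying features are.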

PERSONALIZED AND PREDICTIVE MEDICINE

Speaking of medicine, AI has long been present in our hospitals. There are already algorithms that scan X-rays for abnormalities. Ones that help perform robot-assisted surgery. And neural networks that automate administrative tasks like ordering blood tests and processing bills. But one of the hottest trends is precision medicine: using AI to customize health care for each individual patient.

Before, everyone who showed certain symptoms received a uniform treatment. “Now, in a short period of time, you can evaluate the patient on individual information like genetics and genomics,” says Emma Zhang, associate professor of information systems and operations management at Goizueta Business School. “You can then formulate a highly personalized treatment.”

Emma Zhang, associate professor of information systems and operations management

At the same time, health care professionals can use that same information, along with broader data like ethnicity, gender, age, and even social determinants like income and geographic location, to train algorithms that predict an individual’s response to certain drugs or treatments.

“Every patient’s biology is different,” says Hui Shao, associate professor at Rollins School of Public Health. “Causal AI can help us understand the variations and identify a cluster of people who might be highly respondent to certain treatments so they can receive the highest level of benefit.”
